Interview with system testing experts Simone Gronau and Hans Quecke about typical challenges in defining system tests and how model-based methods might offer an alternative. Interviewer: Daniel Lehner from Johannes Kepler University, Austria

As a product manager at Vector, Simone Gronau focuses on testing concepts for system tests. In particular, model-based testing has accompanied her since she wrote her master’s thesis on the topic.

What is a system test?

D. Lehner: Let’s start by briefly clarifying the topic we will be talking about today: what is your understanding of a system test?

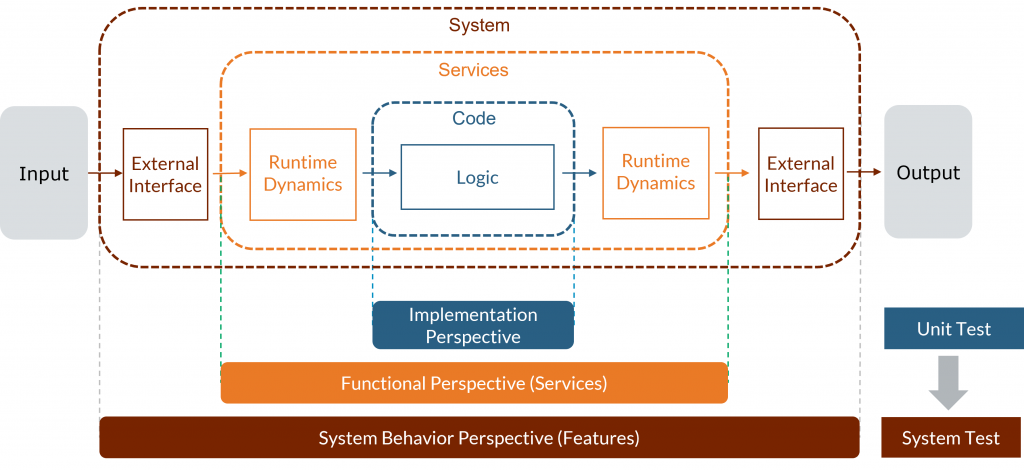

H. Quecke: Essentially, in system testing, we are testing a fully integrated, or at least nearly fully integrated, system. In contrast, integration tests are performed on integrated parts of this system, and unit tests are executed on an isolated unit.

S. Gronau: I fully agree with this definition. In system testing, we want to test the behavior of an independently running system. To do so, this system must already provide the final external interfaces through which test cases are executed. These external interfaces can be simple software interfaces if we are testing a software system, various electrical, digital, or analog I/Os if we are also accessing hardware during our test, or even network interfaces if we are testing a distributed or cyber-physical system that communicates via the internet.

What are common problems in traditional system testing?

D. Lehner: When you talk to a new customer, where do you usually see the main effort in the kind of system testing you have just sketched out?

S. Gronau: In my opinion, the main issue we usually see is that system tests are not maintainable. One small change in the system usually requires a lot of effort to adapt all system tests accordingly. Especially if this change introduces a new variant of the entire system or of a system component, it is very hard for practitioners to determine which existing tests are affected by the change. Conversely, it is also very hard to see which system variants are already appropriately covered by test cases and which are not. Analyzing these aspects usually costs a lot of avoidable effort that people often have to go through during system testing.

H. Quecke: I’d like to add two more points that I often see customers struggling with. System testing requires a lot of communication between different stakeholders in an organization. First, there is a domain expert who knows the details of the system to be built and who works together with a requirements engineer to create a specification of the system requirements that should be tested through system tests. A test expert then picks this up to design the test cases that should cover these requirements. A programmer needs to turn these test cases into code, and there might even be a dedicated person in charge of executing these tests and verifying the results on the system.

All these people need to communicate regularly in order to successfully implement a set of system tests. For example, once a system test fails, the tester needs to explain to the requirements engineer why the test failed, decide together whether this is due to an error in the requirements, in the system, or in the test, and then explain to a developer how the system might need to be adapted. However, because they come from different practical backgrounds, these people usually don’t share a common language, a common understanding of the system to be tested, or even a common document in which they collect what they are discussing. All of this creates an enormous amount of overhead that people struggle with every day.

Secondly, copy-paste is still a common practice in system testing. People often create a base test case, which they simply copy and adapt for each new test case. If one aspect of this base test case later has to change, the change must be made manually in every variant that was created by copy-paste. That alone is manageable; the bigger issue is that you might miss some of these copies when making the change. A missed copy often only shows up as a failing test in the next test run, and especially when testing a system that includes hardware, executing all tests might take days. That is valuable time you lose fixing your tests after just a small change in the system.
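To make the copy-paste problem more concrete, here is a minimal Python sketch, written under our own assumptions, of the alternative: one base test that is parameterized over a table of system variants instead of being copied per variant. The names `Variant`, `VARIANTS`, `read_voltage`, and `run_base_test` are hypothetical and stand in for whatever a real test environment would provide; the measurement is a stub, not a real hardware access.

```python
from dataclasses import dataclass


# Hypothetical description of one system variant under test.
@dataclass(frozen=True)
class Variant:
    name: str
    supply_voltage: float  # nominal supply voltage in volts
    tolerance: float       # allowed deviation in volts


# All variants live in one table instead of in copy-pasted test cases.
VARIANTS = [
    Variant("base", 12.0, 0.5),
    Variant("heavy_duty", 24.0, 1.0),
]


def read_voltage(variant: Variant) -> float:
    """Hypothetical stub standing in for a real measurement on the system."""
    return variant.supply_voltage + 0.2


def run_base_test(variant: Variant) -> bool:
    """The single base test: a change made here applies to every variant."""
    measured = read_voltage(variant)
    return abs(measured - variant.supply_voltage) <= variant.tolerance


def run_all() -> dict[str, bool]:
    """Run the base test once per variant and collect the verdicts."""
    return {v.name: run_base_test(v) for v in VARIANTS}


if __name__ == "__main__":
    for name, passed in run_all().items():
        print(f"{name}: {'PASS' if passed else 'FAIL'}")
```

Adding a new variant then means adding one row to `VARIANTS`, and changing the test logic means editing `run_base_test` once, so no copy can be forgotten.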

How can we define system tests in a model-based way?

D. Lehner: Now, we want to get some insights into using model-based methods to define system tests more effectively. So my obvious question to you is: How can I define system tests in a model-based way?

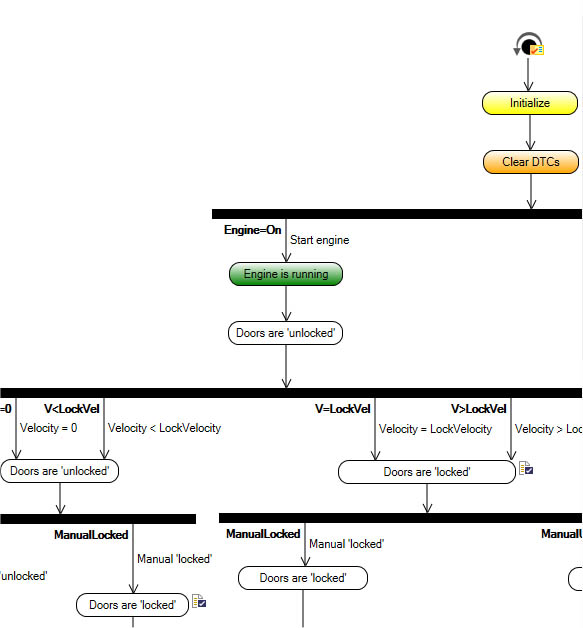

S. Gronau: Model-based testing is a way to test your system with a focus on efficiency, maintainability, and reusability. In model-based system testing, you usually use a graphical notation to define your tests, which has many benefits compared to traditional, code-based methods. As this notation is optimized for system tests, it guides testers in defining their tests efficiently, and it is definitely easier for other stakeholders to read than software code. In a first step, the focus is on abstraction; only in a second step are the execution details added to the so-called “test model”. Of course, at the end of the day, this test model is used to generate executable code, but the complexity of this code is hidden behind the graphical notation.

D. Lehner: To make this a bit more tangible: let’s imagine that I have created some system tests for my project. What criteria do these tests have to meet for me to call them model-based system tests?

H. Quecke: As Mrs. Gronau said, a model-based system test is developed using some sort of abstraction mechanism and a graphical notation. The main point is that you develop building blocks that can afterward be combined in a plug-and-play manner to create actual system tests. In the end, the code is generated automatically from these test models, but no human ever needs to read or edit it directly. This enables people to handle the complexity of testing large systems efficiently and to understand the final system tests, even if they are not familiar with programming languages.

D. Lehner: Thank you for these first insights. In the next part of our expert interview, we will dive deeper into model-based system testing and how this approach reduces maintenance effort.
