We address the challenges that emerging cyber-physical systems pose to traditional software testing techniques. In this blog post, the first part of a series, we focus on testing the physical part of such systems and take a deep dive into the three major challenges that usually come with it.

Through trends such as the Internet of Things (IoT) and Industry 4.0, software and physical devices are becoming increasingly connected. So-called cyber-physical systems enrich physical devices by connecting them to software that runs in the cloud. This connection enables software systems to use the data emitted by physical devices and to control these devices based on the collected data. As a result, this software enriches physical systems through applications such as predictive maintenance, runtime optimization, or lot-size 1 production. Nowadays, production companies have to rely on such applications to stay competitive. However, engineering and testing software that interacts with physical devices involves a set of unique challenges, mainly because established software engineering techniques are not yet equipped to handle the requirements of cyber-physical systems or to test them.

You shouldn’t break a physical system

When testing software, you try to break the “system under test”. One of the first things you learn when testing physical systems is that it’s usually hard to “press restart”: if you break a physical device, it probably won’t work again. This is acceptable for small test devices, but not if you test robots that cost hundreds of thousands of euros.

But how can we make sure that our cyber-physical system does not misbehave once it’s running if we cannot test it seriously beforehand? This is where simulations come into play. A simulation is a virtual replication of the behavior of your actual physical system. Once you formally specify the physical properties of your devices and how certain actions change these properties, you can simulate these actions instead of performing them on the running devices. Most importantly, a simulation is a piece of software, so breaking it costs nothing. You can therefore test all the worst-case scenarios you would usually test in pure software, and the simulation tells you whether your physical device would behave as expected.
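To make the idea concrete, here is a minimal sketch in Python of a simulated device and a worst-case test against it. Everything in it is a hypothetical illustration: `SimulatedJoint` models a single robot joint with made-up angle and speed limits, and `safe_move` stands in for the control logic under test. Instead of destroying hardware, the simulation merely records damage and can be reset.

```python
from dataclasses import dataclass


@dataclass
class SimulatedJoint:
    """Hypothetical simulation of a single robot joint.

    The limits are illustrative values, not taken from any real robot.
    """
    angle_deg: float = 0.0
    min_angle_deg: float = -170.0
    max_angle_deg: float = 170.0
    max_speed_deg_s: float = 180.0
    damaged: bool = False

    def move(self, target_deg: float, duration_s: float) -> None:
        """Simulate moving the joint to target_deg within duration_s seconds."""
        if duration_s <= 0:
            raise ValueError("duration_s must be positive")
        if self.damaged:
            raise RuntimeError("joint is damaged and cannot move")
        speed = abs(target_deg - self.angle_deg) / duration_s
        out_of_range = not (self.min_angle_deg <= target_deg <= self.max_angle_deg)
        # On the real device, violating these limits could destroy hardware;
        # in the simulation, we only record the damage.
        if out_of_range or speed > self.max_speed_deg_s:
            self.damaged = True
            return
        self.angle_deg = target_deg

    def reset(self) -> None:
        """Unlike the physical device, a simulation can simply be restarted."""
        self.angle_deg = 0.0
        self.damaged = False


def safe_move(joint: SimulatedJoint, target_deg: float, duration_s: float) -> bool:
    """Hypothetical control logic under test: rejects commands outside the limits."""
    if duration_s <= 0:
        return False
    if not (joint.min_angle_deg <= target_deg <= joint.max_angle_deg):
        return False
    if abs(target_deg - joint.angle_deg) / duration_s > joint.max_speed_deg_s:
        return False
    joint.move(target_deg, duration_s)
    return True


# Worst-case commands we would never try on a real robot.
worst_cases = [(400.0, 1.0), (170.0, 0.1), (-500.0, 10.0)]
for target, duration in worst_cases:
    joint = SimulatedJoint()
    accepted = safe_move(joint, target, duration)
    print(f"target={target}, duration={duration}: accepted={accepted}, damaged={joint.damaged}")
```

Of course, such a test is only as trustworthy as the simulation's limits and physics, which leads directly to the next problem.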

However, simulations introduce another challenge. How can you make sure that a simulation correctly represents the physical device in an error setting if you have never tested the system in that setting (because doing so would break it)? In the end, you can either spend a lot of money on test equipment to validate your simulations or simply accept this gap. Either way, a lot of uncertainty remains, even after thoroughly testing your code against simulations of your physical system. This is one of the main reasons why testing cyber-physical systems is currently so hard.

Heterogeneity in the physical world

When testing software, we usually deal with proprietary data formats imposed by the API/method we are testing. This means we have to rebuild this structure in our testing system – or simply access the internal classes that are used by the tested software.

When testing hardware, we usually have different kinds of devices from different vendors, using different programming languages, communication protocols, and data formats.

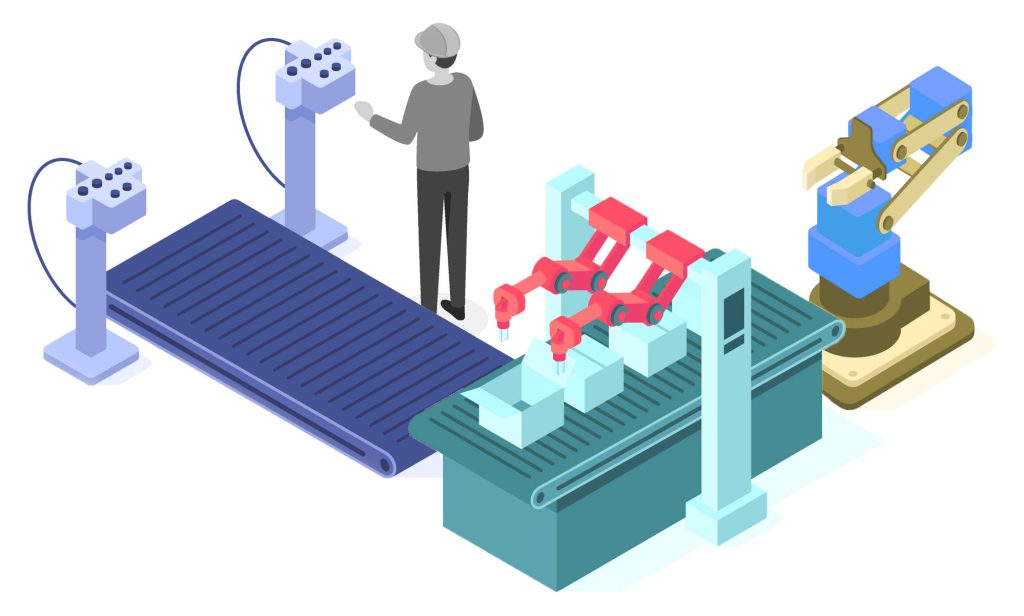

In one production line, there might be a robot from Universal Robots that picks up an item from a conveyor belt from Montech and puts it on a mobile robot from ABB. The robot from ABB transports this item to a packaging unit where it is handled by a human, together with a collaborative robot from Bosch Rexroth.

This introduces many new levels of heterogeneity, which we need to handle in order to actually execute test cases:

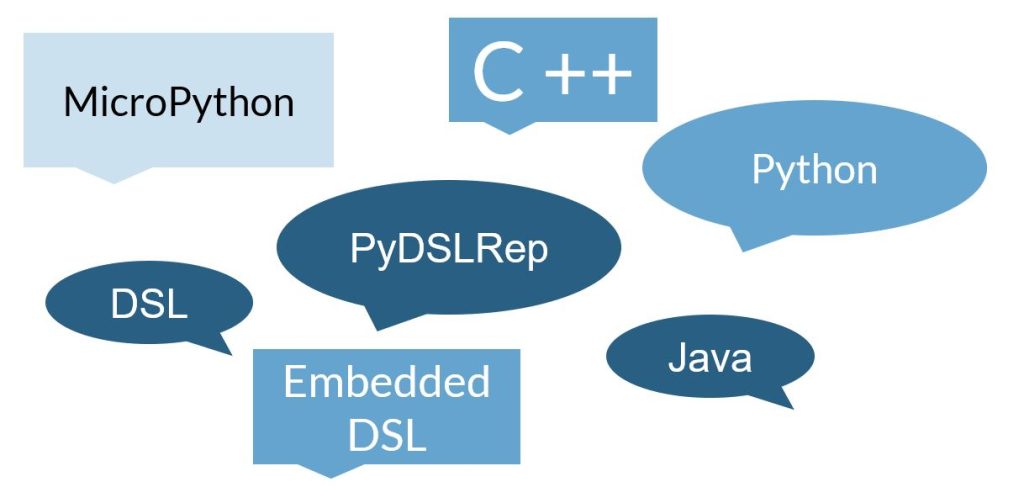

1. Different levels of code abstraction

Even though a software system might comprise services written in different programming languages, these languages usually operate on the same level of abstraction. The variety of devices in the physical world, however, comes with a variety of programming levels. Whereas some robots are configured in a graphical domain-specific language optimized for domain experts, low-power communication endpoints might require C or MicroPython running directly on microcontrollers, and software running on middleware with more computing power might be written in high-level general-purpose languages such as Python, Java, or C#.

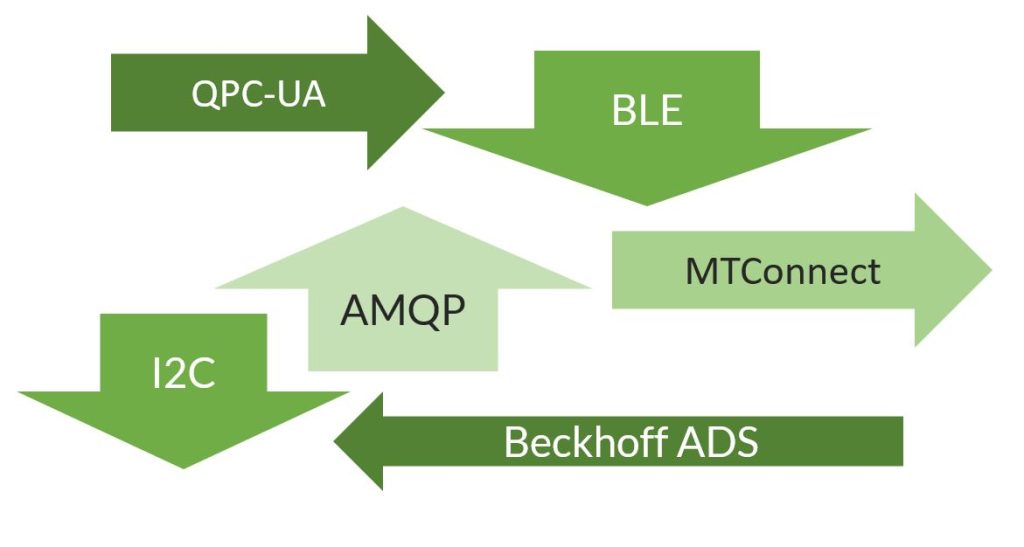

2. Different communication protocols

The communication between these devices introduces the next level of heterogeneity. In software systems, services written in different programming languages usually communicate via standardized approaches such as REST or MQTT. In the physical world, however, there are so many different standards (industrial examples include OPC UA, Modbus, and PROFINET) that you have to test many different variants, which makes your test code considerably more complex. To make things even more difficult, the simulations you need for testing these systems also follow different standards.
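One common way to keep test code manageable despite many protocols is to write test cases against a protocol-independent interface and hide each protocol behind an adapter. The following Python sketch illustrates this under clear assumptions: `DeviceConnection`, `FakeRegisterConnection`, and `FakeTopicConnection` are hypothetical names, and the two adapters are in-memory fakes that only mimic the flavor of a register-based fieldbus and a topic-based publish/subscribe protocol. In a real setup, each adapter would wrap an actual client library for its protocol, which is not shown here.

```python
from abc import ABC, abstractmethod


class DeviceConnection(ABC):
    """Protocol-independent interface that test cases are written against."""

    @abstractmethod
    def read(self, name: str) -> float: ...

    @abstractmethod
    def write(self, name: str, value: float) -> None: ...


class FakeRegisterConnection(DeviceConnection):
    """Hypothetical in-memory stand-in for a register-based fieldbus device.

    Values live in numbered integer registers, so a name-to-register map
    and a scaling factor are needed.
    """

    def __init__(self, register_map: dict[str, int], scale: float = 100.0):
        self.registers: dict[int, int] = {}
        self.register_map = register_map
        self.scale = scale  # floats are stored as scaled integers

    def read(self, name: str) -> float:
        return self.registers.get(self.register_map[name], 0) / self.scale

    def write(self, name: str, value: float) -> None:
        self.registers[self.register_map[name]] = round(value * self.scale)


class FakeTopicConnection(DeviceConnection):
    """Hypothetical in-memory stand-in for a publish/subscribe device
    that exchanges string payloads on named topics."""

    def __init__(self, prefix: str):
        self.topics: dict[str, str] = {}
        self.prefix = prefix

    def read(self, name: str) -> float:
        return float(self.topics.get(f"{self.prefix}/{name}", "0"))

    def write(self, name: str, value: float) -> None:
        self.topics[f"{self.prefix}/{name}"] = str(value)


def check_speed_roundtrip(conn: DeviceConnection) -> bool:
    """One test case, reused unchanged for every protocol variant."""
    conn.write("conveyor_speed", 0.25)
    return abs(conn.read("conveyor_speed") - 0.25) < 1e-9


connections = [
    FakeRegisterConnection({"conveyor_speed": 40001}),  # hypothetical register number
    FakeTopicConnection("line1/conveyor"),              # hypothetical topic prefix
]
for conn in connections:
    print(type(conn).__name__, check_speed_roundtrip(conn))
```

The adapters do not remove the heterogeneity; they concentrate it in one place per protocol, so that adding a protocol means writing one adapter instead of touching every test case.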

3. Different exchange formats

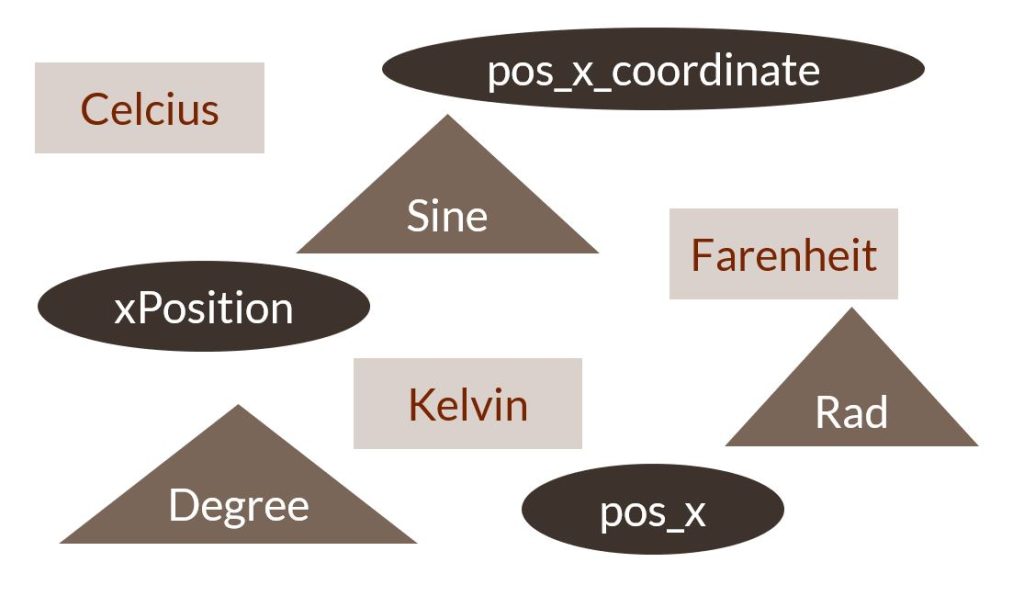

The hard part actually starts once we take into account that the different devices used in a physical system also come from different vendors. Usually, each vendor has its own way of describing the data emitted or received by its devices. In a system that combines devices from different vendors, this introduces a lot of heterogeneity. It starts with different names for the same things. For example, if you want to communicate the current position of an item between two devices, the sending device might send a single property named “pos” that contains all coordinates (such as pos_x_coordinate), whereas the receiving device might expect three separate properties such as xPosition, yPosition, and zPosition. It continues with different concepts for describing the same properties (e.g., one device might measure distances in metric units, while another requires imperial units). Coping with this heterogeneity is particularly hard because tests usually run on a dedicated computing unit, which means that you have to access the device code via external interfaces. Thus, you don’t have access to the internal data structures of the exchange formats. Instead, you have to rebuild them manually within your test cases.
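To illustrate what rebuilding exchange formats by hand looks like, here is a minimal Python sketch of a test for the position handover described above. Both message layouts are hypothetical: we assume vendor A sends a nested “pos” object in millimeters and vendor B expects flat xPosition, yPosition, and zPosition values in inches. The function `vendor_a_to_vendor_b` is an illustrative name, not part of any vendor SDK.

```python
MM_PER_INCH = 25.4  # exact by definition


def vendor_a_to_vendor_b(message_a: dict) -> dict:
    """Translate a hypothetical vendor-A position message (millimeters)
    into the hypothetical vendor-B format (inches).

    Both layouts are rebuilt by hand in the test code, because the
    devices' internal data structures are not accessible.
    """
    pos = message_a["pos"]
    return {
        "xPosition": pos["pos_x_coordinate"] / MM_PER_INCH,
        "yPosition": pos["pos_y_coordinate"] / MM_PER_INCH,
        "zPosition": pos["pos_z_coordinate"] / MM_PER_INCH,
    }


def test_position_handover() -> None:
    # Hand-rebuilt vendor-A message: item at x=254 mm, y=50.8 mm, z=0 mm.
    message_a = {
        "pos": {
            "pos_x_coordinate": 254.0,
            "pos_y_coordinate": 50.8,
            "pos_z_coordinate": 0.0,
        }
    }
    message_b = vendor_a_to_vendor_b(message_a)
    # Vendor B must receive the same physical position, expressed in inches.
    assert abs(message_b["xPosition"] - 10.0) < 1e-9
    assert abs(message_b["yPosition"] - 2.0) < 1e-9
    assert message_b["zPosition"] == 0.0


test_position_handover()
print("position handover test passed")
```

Note that this mapping only covers one pair of vendors. Every additional combination of devices needs its own hand-written translation, which quickly becomes a maintenance burden.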

Standardization to handle these challenges

Handling these levels of heterogeneity requires a significant amount of work before a physical system can reasonably be tested. So far, the only way to reduce this effort is to establish company-wide standards for exchange formats and communication protocols. If these standards are implemented in each programming language used in a particular cyber-physical system and mapped to the proprietary communication protocols and exchange formats of the individual system parts, test cases can rely on these standardized representations. This relieves us of handling proprietary information; instead, we can use the implemented standards as a single source of information. Cross-company standards would even encourage vendors to provide mappings from their proprietary protocols to these standards, so we would no longer need to develop these mappings ourselves. Several consortia are already working to establish such commonly accepted standards, ranging from the “Plattform Industrie 4.0” and the “Internet of Production – Cluster of Excellence” in Germany to worldwide groups such as the “Internet of Production Alliance” and the “Object Management Group”. However, the sheer range of heterogeneity in cyber-physical systems poses many challenges, so establishing such standards still requires a significant amount of work by these consortia.
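The following Python sketch shows why a standard pays off in test code. It assumes a hypothetical company-wide `Position` type in meters and reuses the hypothetical vendor A (millimeters) and vendor B (inches) formats from the previous section. Each vendor format needs exactly one mapping into the standard, so N formats require N mappings instead of one per pair of devices, and test helpers such as `positions_match` only ever see the standard representation. Only the direction into the standard is shown.

```python
from dataclasses import dataclass
from typing import Callable

M_PER_INCH = 0.0254  # exact by definition


@dataclass(frozen=True)
class Position:
    """Hypothetical company-wide standard: position in meters."""
    x_m: float
    y_m: float
    z_m: float


# One mapping per (hypothetical) vendor format into the standard.
TO_STANDARD: dict[str, Callable[[dict], Position]] = {
    "vendor_a": lambda m: Position(
        m["pos"]["pos_x_coordinate"] / 1000,  # millimeters -> meters
        m["pos"]["pos_y_coordinate"] / 1000,
        m["pos"]["pos_z_coordinate"] / 1000,
    ),
    "vendor_b": lambda m: Position(
        m["xPosition"] * M_PER_INCH,  # inches -> meters
        m["yPosition"] * M_PER_INCH,
        m["zPosition"] * M_PER_INCH,
    ),
}


def to_standard(vendor: str, message: dict) -> Position:
    return TO_STANDARD[vendor](message)


def positions_match(a: Position, b: Position, tol_m: float = 1e-6) -> bool:
    """Test helper written only against the standard representation."""
    pairs = ((a.x_m, b.x_m), (a.y_m, b.y_m), (a.z_m, b.z_m))
    return all(abs(p - q) <= tol_m for p, q in pairs)


sent = to_standard(
    "vendor_a",
    {"pos": {"pos_x_coordinate": 254.0, "pos_y_coordinate": 50.8, "pos_z_coordinate": 0.0}},
)
received = to_standard("vendor_b", {"xPosition": 10.0, "yPosition": 2.0, "zPosition": 0.0})
print(positions_match(sent, received))
```

If vendors shipped such mappings themselves, as cross-company standards would encourage, the `TO_STANDARD` table would no longer have to be maintained by the test team.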

Testing cyber-physical systems: next steps

In this article, we have given you a first overview of the complexity involved in testing physical systems. With this in mind, we can begin to adapt existing software testing methods to handle this complexity, making it easier to maintain a high level of quality in the products you develop.