Software used to be seen as something that could be written once and used many times without ever “breaking down.” That illusion faded as problems began to appear, problems ultimately caused by continual development without the correct quality control processes in place. You probably know the cause first-hand: incredible business pressure to release new products and features on a compressed time-to-market schedule.

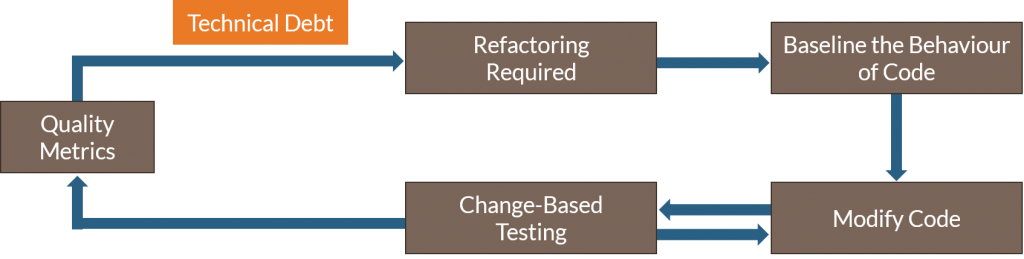

These issues leave software applications carrying an enormous amount of technical debt, a metaphor for the latent defects introduced during system architecture, design or development. The liability that accumulates when organizations take design and test shortcuts to meet short-term goals eventually makes software difficult to maintain. What is the consequence of increasing technical debt? You spend a growing share of your time fixing bugs and struggling with fragile code rather than building new features.

Your key to reducing technical debt is to refactor components over time. Refactoring is the process of restructuring an application’s components without changing its external behavior. But your developers will often be hesitant to do so for fear of breaking existing functionality. One of the biggest barriers to refactoring is the lack of tests that characterize the existing behavior of a component.

Technical debt concerns multiply in an IoT-enabled world

The advent and growing prevalence of the Internet of Things (IoT) has made the problem of technical debt more acute. Previously, when systems were self-contained and had low connectivity, it was possible to keep technical debt relatively isolated. With IoT-enabled devices, however, not only is the sheer number of systems increasing, but technical debt is also compounded. The technical sacrifices made in an individual device may not have been a problem on their own, but taken as a whole, the issue is much more apparent.

By definition, every IoT-enabled electronic device has network connectivity. This means that every manufacturer of electronic devices is also active in the software business to some degree. This expands the scope of responsibility into new platforms and services and introduces a demand for predictable behavior, even more so if the safety of users or the environment is at risk. However, in a fiercely competitive industry such as IoT, the first-to-market advantage is huge. You as a developer will be under intense pressure to get products released quickly. We only have to look at Amazon’s and Microsoft’s expansion into IoT or Bosch’s IoT Suite to appreciate how mainstream this is becoming.

This thinking tends to sacrifice quality for speed. The trade-off can be dangerous for many IoT-enabled products, such as smart cars, connected medical devices and home safety systems, where a malfunction can put lives at risk.

When consumer-grade becomes safety-critical

IoT has also driven a shift toward a new generation of software that previously had no safety-critical requirements but now does. For example, as we move into the era of connected and autonomous cars, automatic emergency braking systems are controlled by safety-critical software. This software powers cameras, radar, proximity sensors and more, all of which need to operate flawlessly in order to safely stop a vehicle if a driver is slow to respond. The embedded camera that was previously used for driver assistance (parking, for example) is now part of this safety-critical system. As these software-driven systems migrate from consumer-grade to safety-critical applications, faulty software has severe ramifications. Quality is no longer an option – it is a necessity.

Characterizing the behavior of software – baseline testing

A lack of sufficient tests typically means that a software application cannot be easily modified. Changes can frequently break existing functionality. Consequently, when you modify a unit of code and some existing capability is then broken, you need to understand the reason for the failure:

- Was the original software written incorrectly?

- Was a requirement missed because it wasn’t adequately captured originally?

- Or was the failure caused by a modification you just introduced?

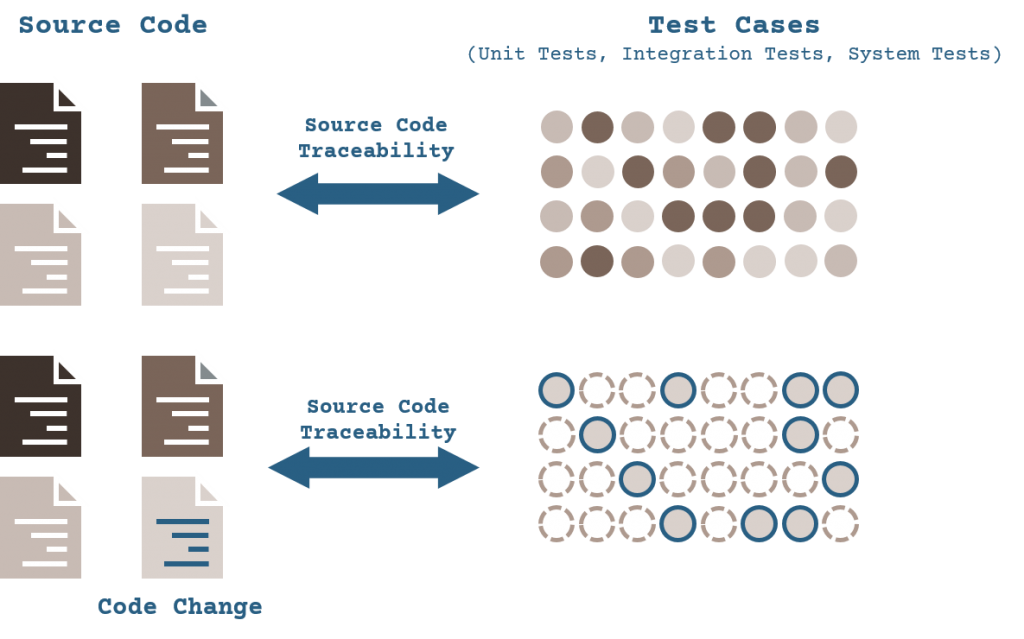

Baseline testing, also known as characterization testing, is useful for legacy code bases that have inadequate test cases. It is very unlikely that the owners of a deployed application that lacks proper testing would ever go back to the beginning and build all of the test cases required. However, because the application has been in use for some time, it is possible to use the existing source code as the basis for building test scenarios. By using automatic test case generation you can quickly provide a baseline set of tests that capture and characterize existing application behavior.

While these tests do not prove correctness, they do capture what the application does today. With that automatically constructed regression suite you can validate that future changes, updates and modifications do not break existing functionality. As a result, the test completeness of legacy applications is improved, and refactoring can be done with confidence.
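To make the idea concrete, here is a minimal sketch of a characterization test in Python. It is an illustration under stated assumptions, not the output of any particular test generation tool: `legacy_shipping_cost`, `SAMPLE_INPUTS`, `record_baseline` and `check_against_baseline` are hypothetical names invented for this example. The sketch records whatever the code returns today and later reports any input whose output has changed.

```python
import json
import tempfile
from pathlib import Path


def legacy_shipping_cost(weight_kg, express):
    """Hypothetical stand-in for untested legacy code we want to pin down."""
    cost = 4.0 + 1.5 * weight_kg
    if express:
        cost *= 2
    if weight_kg > 20:
        cost += 10  # an undocumented surcharge; the baseline captures it anyway
    return round(cost, 2)


# Hypothetical inputs chosen to exercise each branch of the legacy function.
SAMPLE_INPUTS = [(1, False), (1, True), (25, False), (25, True)]


def record_baseline(func, inputs, path):
    """Run func on every input and store the observed outputs as the baseline."""
    observed = {json.dumps(args): func(*args) for args in inputs}
    Path(path).write_text(json.dumps(observed, indent=2, sort_keys=True))
    return observed


def check_against_baseline(func, path):
    """Return {input: (expected, actual)} for every input whose output changed."""
    baseline = json.loads(Path(path).read_text())
    mismatches = {}
    for key, expected in baseline.items():
        actual = func(*json.loads(key))
        if actual != expected:
            mismatches[key] = (expected, actual)
    return mismatches


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        baseline_file = Path(tmp) / "baseline.json"
        record_baseline(legacy_shipping_cost, SAMPLE_INPUTS, baseline_file)
        # No code has changed yet, so no mismatches are reported.
        print(check_against_baseline(legacy_shipping_cost, baseline_file))
```

Note that the baseline stores observed behavior, including the undocumented surcharge. Whether that behavior is correct remains a separate question; the test only tells you when it changes.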

Paying off technical debt – Change-based testing

Once you have characterized the behavior of the software through baseline testing, you can begin making updates and modifications to the code. To further automate the continuous integration and testing processes, you may use impact analysis in the form of change-based testing. This means you run only the set of test cases that demonstrate what effect code changes have on the integrity of the whole system. It is not uncommon for a company to take weeks to run all of its test cases. But with change-based testing, you can make a code change and get feedback on its impact on the entire application within minutes. As a result, you are able to make quick, incremental changes to the software, knowing that you have the test cases needed to capture its existing behavior.
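The selection step can be sketched in a few lines of Python. This is a simplified illustration that assumes you already have a map from each test to the source files it executes (for example, from a coverage run); `select_tests`, `COVERAGE_MAP` and the file and test names are all hypothetical.

```python
def select_tests(coverage_map, changed_files):
    """Return the sorted names of tests that execute at least one changed file."""
    changed = set(changed_files)
    return sorted(
        test for test, files in coverage_map.items() if changed & set(files)
    )


# Hypothetical coverage data: which source files each test executes.
COVERAGE_MAP = {
    "test_brake_controller": {"brake.c", "sensor_fusion.c"},
    "test_parking_camera": {"camera.c"},
    "test_radar_filter": {"radar.c", "sensor_fusion.c"},
}

if __name__ == "__main__":
    # Only the two tests that touch sensor_fusion.c need to run.
    print(select_tests(COVERAGE_MAP, ["sensor_fusion.c"]))
    # prints ['test_brake_controller', 'test_radar_filter']
```

A file-level map like this is deliberately coarse. Real impact analysis may work at function or line granularity, but the principle is the same: the change determines which tests run.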

You are also able to do further analysis:

- Is something broken because an error has been introduced?

- Has capability been removed that actually should be there?

- Is there a bug that should be addressed because it may have other ramifications?
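One way to support this kind of analysis is to sort the differences between recorded and current behavior into categories before a person reviews them. The following sketch assumes both are available as dictionaries that map a description of an input to the observed output; `compare_to_baseline` and the sample data are hypothetical.

```python
def compare_to_baseline(baseline, current):
    """Classify differences between recorded and current behavior."""
    shared = baseline.keys() & current.keys()
    return {
        # Same input, different output: a possible regression or a bug fix.
        "changed": {
            key: (baseline[key], current[key])
            for key in shared
            if baseline[key] != current[key]
        },
        # Behavior that was recorded but no longer exists.
        "removed": sorted(baseline.keys() - current.keys()),
        # Behavior that is new since the baseline was recorded.
        "added": sorted(current.keys() - baseline.keys()),
    }


if __name__ == "__main__":
    recorded = {"stop_at_30kmh": "brake", "park_assist": "beep", "idle": "none"}
    observed = {"stop_at_30kmh": "warn", "idle": "none", "lane_keep": "steer"}
    print(compare_to_baseline(recorded, observed))
```

The script can only report that something differs. Deciding whether a changed entry is a newly introduced error or the correction of an old bug still requires your judgment.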

Conclusion

Baseline testing formalizes what an application does today, so you can validate future changes and ensure that existing functionality is not broken. With change-based testing you run only the minimum set of test cases needed to quickly show the effect of code changes. Together, they allow characterization tests to be integrated into a Continuous Integration/Delivery style deployment.

In an IoT-enabled world, a great amount of legacy code will find its way onto critical paths in new applications. Without proper software quality methods in place to ensure the integrity of this legacy code, the overall safety of the system may be compromised.

Baseline testing combined with change-based testing can help you reduce technical debt in existing code bases effectively and allow you to refactor and enhance with confidence.